New Report: Risky Analysis: Assessing and Improving AI Governance Tools

An international review of AI Governance Tools and suggestions for pathways forward

Kate Kaye, Pam Dixon

December 2023


Index

- Brief Summary of Report

- About the Authors

- About The World Privacy Forum

- Executive Summary: Why the World Privacy Forum Conducted This Research

- Background and Introduction

- Methodology

- Findings

- Part I. Discussion: Critical Analysis of AI Governance Tools

- Measuring AI Fairness Measures

- Use Cases in AI Fairness

- Pathways for Building an Evaluation Environment and Creating Improvements in the AI Governance Tools Ecosystem

- PART II: A Survey of AI Governance Tools and Other Notable AI Governance Efforts from around the World

- Intergovernmental Organization Toolkits and Use Cases from International and Regional Multilateral Institutions

- Regional Development Banks

- AI Governance Tools and Use Cases from National Governments and NGOs

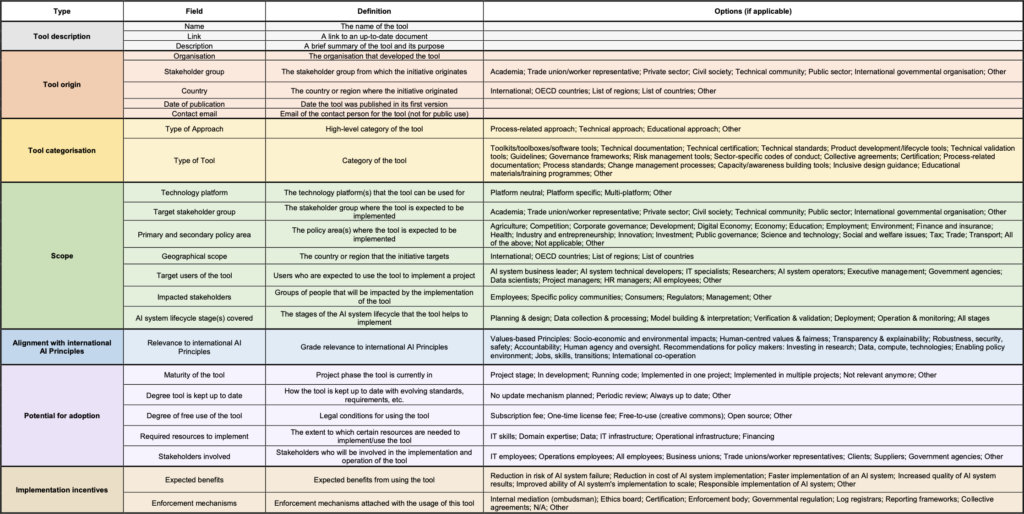

- Appendix B: AI Governance Tools and Features Comparison Chart

- Appendix C: Some AI Governance Tools Feature Off-label, Unsuitable, or Out-of-context Uses of Measurement Methods

- Appendix D: OECD Catalog of Tools and Metrics Framework

Brief Summary of Report

AI systems should not be deployed without simultaneously evaluating the potential adverse impacts of such systems and mitigating their risks. Most of the world agrees about the need to take precautions against the threats posed by AI systems. Tools and techniques exist to evaluate and measure AI systems for their inclusiveness, fairness, explainability, privacy, safety, and other trustworthiness issues. These tools and techniques, referred to collectively here as AI governance tools, can help address such issues. While some AI governance tools provide reassurance to the public and to regulators, the tools too often lack meaningful oversight and quality assessments. Incomplete or ineffective AI governance tools can create a false sense of confidence, cause unintended problems, and generally undermine the promise of AI systems. This report addresses the need for improved AI governance tools.

The goal of this research is to help gather evidence that will assist in building a more reliable body of AI governance tools. This report analyzes, investigates, and appraises AI governance tools, including practical guidance, self-assessment questionnaires, process frameworks, technical frameworks, technical code, and software disseminated in Africa, Asia, North America, Europe, South America, Australia, and New Zealand. The report also analyzes existing frameworks, such as data governance and privacy, and how they integrate into the AI ecosystem. In addition to an extensive survey of AI governance tools, the research presents use cases discussing the contours of specific risks. The research and analysis for this report connect many layers of the AI ecosystem, including policy, standards, scholarly and technical literature, government regulations, and best practices.

Our work found that AI governance tools used in most regions of the world for measuring and reducing risks and negative impacts of AI could introduce novel, unintended problems or create a false sense of confidence unless accompanied by evaluation and measurement of those tools and their effectiveness and accuracy.

In this report we suggest pathways for creating a healthy AI governance tools environment, and offer suggestions for governments, multilateral organizations, and others creating or publishing AI governance tools. These suggestions include best practices taken from existing AI and other quality assessment standards and practices already in widespread use. Appropriate procedural and administrative controls include: 1) providing AI governance tool documentation and contextualization, review, audit, and other quality assurance procedures to prevent integration of inappropriate or ineffective methods in policy guidance; 2) identifying and preventing conflicts of interest; and 3) ensuring that capabilities and functionality of AI governance tools align with policy goals. If governments, multilateral institutions, and others working with or creating AI governance tools can incorporate lessons learned from other mature fields such as data governance and quality assessment, the result will establish a healthier body of AI governance tools, and over time, healthier and more trustworthy AI ecosystems.

About the Authors

(Listed alphabetically)

Pam Dixon is the founder and executive director of the World Privacy Forum, a respected nonprofit, non-partisan, public interest research group. An author and researcher, she has written influential studies in the area of identity, AI, health, and complex data ecosystems and their governance for more than 20 years. Dixon has worked extensively on data governance and privacy across multiple jurisdictions, including the US, India, Africa, Asia, the EU, and additional jurisdictions. Her field research on India’s Aadhaar identity ecosystem, peer-reviewed and published in Nature Springer, was cited in India’s landmark Aadhaar Privacy Supreme Court opinion. Dixon currently serves as the co-chair of the UN Statistics Data Governance and Legal Frameworks working group, and is co-chair of WHO’s Research, Academic, and Technical network. At OECD, Dixon is a member of the OECD.AI Network of Experts and serves in multiple expert groups, including the AI Futures group. In prior work at OECD, Dixon was part of the original AI expert group that crafted the OECD AI Principles, which were ratified in 2019. Dixon has presented her work on complex data ecosystems governance to the National Academies of Sciences, Engineering, and Medicine and to the Royal Academies of Science. She is the author of nine books and numerous studies and articles, and she serves on the editorial board of the Journal of Technology Science, a Harvard-based publication. Dixon was named one of the most influential global experts in digital identity in 2021. Dixon received the Electronic Frontier Foundation Pioneer Award in 2021 for her ongoing oeuvre of groundbreaking research regarding privacy and data ecosystems.

Kate Kaye, deputy director of the World Privacy Forum, is a researcher, author, and award-winning journalist. She is a member of the OECD.AI Network of Experts, where she contributes to the Expert Group on AI Risk and Accountability. In addition to her extensive research and reporting on data and AI, Kate is the recipient of the Montreal AI Ethics Institute Research Internship, and was a member of UNHCR’s Hive Data Advisory Board.

Editor: Robert Gellman

Data Visualizations and Report Design: John Emerson

About The World Privacy Forum

The World Privacy Forum is a respected NGO and non-partisan public interest research group focused on conducting research and analysis in the area of privacy and complex data ecosystems and their governance, including in the areas of identity, AI, health, and others. WPF works extensively on privacy and governance across multiple jurisdictions, including the US, India, Africa, Asia, the EU, and additional jurisdictions. For more than 20 years WPF has written in-depth, influential studies, including groundbreaking research regarding systemic medical identity theft; research on India’s Aadhaar identity ecosystem, peer-reviewed work that was cited in the landmark Aadhaar Privacy Opinion of the Indian Supreme Court; and The Scoring of America, an early and influential report on machine learning and consumer scores. WPF co-chairs the UN Statistics Data Governance and Legal Frameworks working group, and is co-chair of the WHO Research, Academia, and Technical Constituency. At OECD, WPF researchers participate in the OECD.AI AI Expert Groups, among other activities. WPF participated as part of the first core group of AI experts that collaborated to write the OECD Recommendation on Artificial Intelligence, now widely viewed as the leading normative principles regarding AI. WPF research on complex data ecosystems governance has been presented at the National Academies of Science and the Royal Academies of Science. World Privacy Forum: https://www.worldprivacyforum.org.1

Risky Analysis: Assessing and Improving AI Governance Tools

An international review of AI Governance Tools and suggestions for pathways forward

World Privacy Forum

© Copyright 2023 Kate Kaye, Author; Pam Dixon, Author; Robert Gellman, Editor; John Emerson, Designer.

Cover and design by John Emerson

All rights reserved.

EBook/Digital: ISBN: 978-0-9914500-2-2

Publication Date: November 2023

Nothing in this material constitutes legal advice.

This report is available free of charge at: https://www.worldprivacyforum.org/2023/12/risky-analysis-assessing-and-improving-ai-governance-tools

Updates to the report will be made at: https://www.worldprivacyforum.org/2023/12/risky-analysis-assessing-and-improving-ai-governance-tools

Acknowledgements

The World Privacy Forum extends our gratitude to the following people who reviewed and/or were interviewed for this report:

Report Reviewers

(Listed alphabetically)

Ryan Calo, Lane Powell and D. Wayne Gittinger professor, University of Washington School of Law; adjunct professor, UW’s Paul G. Allen School of Computer Science and Engineering; and faculty co-founder, UW Tech Policy Lab

Abhishek Gupta, founder and principal researcher at the Montreal AI Ethics Institute

Cristina Pombo Rivera, principal advisor and fAIr LAC coordinator, Social Sector, Inter-American Development Bank

Jason Tamara Widjaja, director of artificial intelligence and responsible AI lead, Singapore Tech Center, MSD

Report Sources

(Listed alphabetically)

Dr. Rumman Chowdhury, CEO, Humane Intelligence

Maria Paz Hermosilla Cornejo, director of GobLab UAI, in the School of Government at Adolfo Ibáñez University, Chile

Abigail Jacobs, assistant professor of information, School of Information, and assistant professor of complex systems, College of Literature, Science, and the Arts, University of Michigan

Lizzie Kumar, PhD candidate in computer science at the Brown University Center for Tech Responsibility

Tim Miller, professor in artificial intelligence at the School of Electrical Engineering and Computer Science at The University of Queensland

Luca Nannini, PhD student in the Information Technology Research PhD program at CiTIUS of the University of Santiago de Compostela, Spain

Ndapewa Onyothi Wilhelmina Nekoto, research and community lead at the Masakhane Research Foundation

Abigail Oppong, independent researcher and Masakhane Research Foundation contributor

Eike Petersen, postdoctoral researcher, DTU Compute, Technical University of Denmark

Cristina Pombo Rivera, principal advisor and fAIr LAC coordinator, Social Sector, Inter-American Development Bank

Cynthia Rudin, Earl D. McLean, Jr. professor of Computer Science and Electrical and Computer Engineering, Duke University

Ian Rutherford, statistician, United Nations Statistics Division

Jason Tamara Widjaja, director of artificial intelligence and responsible AI lead, Singapore Tech Center, MSD (known as Merck and Co. in the US and Canada).

Jane K. Winn, professor of law, University of Washington School of Law

Executive Summary: Why the World Privacy Forum Conducted This Research

The World Privacy Forum undertook the research, writing, and background work for this report to address the risks posed by profound changes and advances in the AI ecosystem. These changes and advances affect people, groups of people, and communities, and they require evidence-based policy responses as soon as possible. Currently, there is a meaningful lack of evidence regarding how to implement and ensure trustworthy AI; this is true both for older AI systems and for newer, more advanced AI systems.

This report is intended to begin building much-needed evidence and procedures regarding how to implement trustworthy AI by analyzing AI governance tools and their functions. This is a critically important task because AI governance tools form a pivotal component of AI systems and their lifecycle. This report defines AI governance tools as:

“Socio-technical tools for mapping, measuring, or managing AI systems and their risks in a manner that operationalizes or implements trustworthy AI.”2

This report also documents what these tools do, where they are located and used, their range of maturity, some of the specific risks they pose, the practices currently in place in relation to these tools, and initial steps to take to begin creating improvements.

AI governance tools are an important area of focus because they sit at the implementation layer of the AI ecosystem and operate across AI system types. When they function well, AI governance tools can help the people, businesses, governments, and organizations implementing or researching AI to examine how AI models are functioning and whether they are performing in expected or intended ways. For example, some AI governance tools are meant to measure fairness or to “de-bias” AI systems. Some are meant to explain AI system outputs. And some are designed to measure and improve system robustness, among other tasks. However, when AI governance tools do not function well, they can exacerbate existing problems with AI systems.

The timing of this report is noteworthy. In 2007, WPF began work on an extensively researched report on machine learning and its impacts, The Scoring of America. Published in 2014, the report articulated the problems with the deep machine learning of the time and discussed why policymakers needed to address problems with bias, transparency, interpretability, fairness, and other issues. It would have been impossible to know that in just a few years, groundbreaking research introducing a new approach to AI network architecture3 would begin to evolve AI and its capabilities in novel ways.4 In a sense, WPF’s 2014 publication marked the last years of an earlier deep learning AI era. In contrast, this report, Risky Analysis—while building on WPF’s earlier AI work—sits at the start of a burgeoning new era in AI.

As such, Risky Analysis is a different kind of report. It documents the existing evidence regarding AI governance tools with an intent to begin building the larger evidentiary repository needed to create an evaluation environment that supports a transparent and healthy body of AI governance tools, which will in turn facilitate a healthier AI ecosystem. WPF intends to continue building on this work. For these reasons, WPF is treating this report as a living document, which WPF will update on a regular basis.

Research Conducted for This Report: Building an Evidence Base Regarding AI Governance Tools

This report surveys the international landscape of AI governance tools and provides an early evidentiary foundation that documents multiple aspects of these tools. The report focuses on AI governance tools published by multilateral organizations and by governments. The report uses the evidence from the survey of tools, in conjunction with in-depth case studies and a review of the scholarly literature, to construct a lexicon of AI governance tool types. Based on the evidence gathered, these tool types include: practical guidance, self-assessment questionnaires, process frameworks, technical frameworks, technical code, and software.

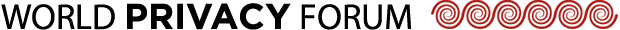

Figure 1: AI Governance Tool Types Lexicon

| Practical Guidance | Includes general educational information, practical guidance, or other consideration factors |

| Self-assessment Questions | Includes assessment questions or detailed questionnaire |

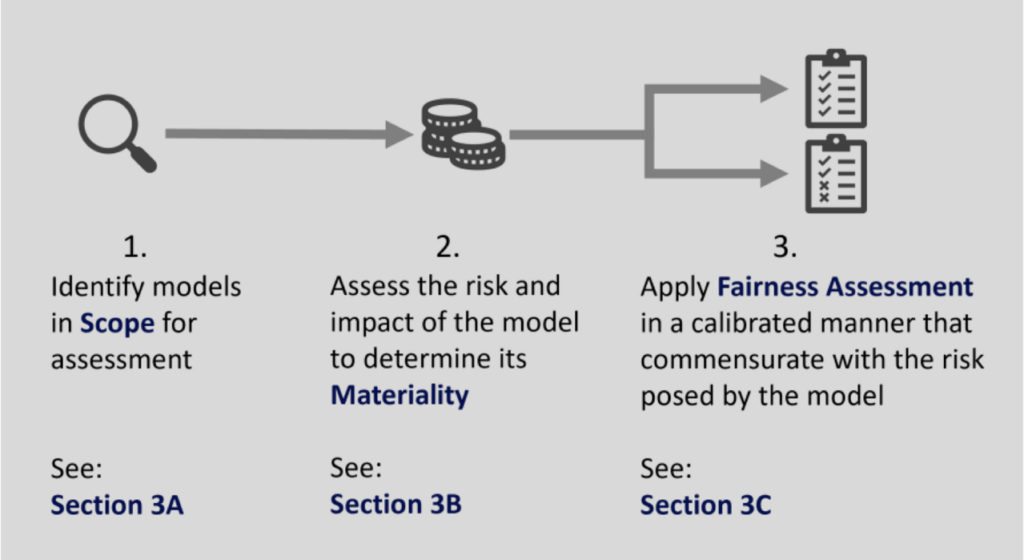

| Procedural Framework | Includes process steps or suggested workflow for AI system assessments and/or improvements |

| Technical Framework | Includes technical methods or detailed technical process guidance or steps |

| Technical Code or Software | Includes technical methods, including use of specific code or software |

| Scoring or Classification Output | Includes criteria for determining a classification, or a mechanism for producing a quantifiable score or rating reflecting a particular aspect of an AI system |

(Source: World Privacy Forum. Research: Kate Kaye, Pam Dixon. Image: John Emerson.) For more information regarding the methodology guiding the evaluation of AI governance tools, and regarding Finding 2 (“Some AI governance tools feature off-label, unsuitable, or out-of-context uses of measurement methods”), see Appendix C.
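The “Scoring or Classification Output” row describes a mechanism that turns assessment answers into a score or a class. The sketch below illustrates that mechanism in its simplest form. The questions, weights, and level cutoffs are entirely hypothetical and do not reproduce any tool surveyed in this report.

```python
# Hypothetical questionnaire: each "yes" answer adds the question's weight.
QUESTION_WEIGHTS = {
    "affects_eligibility_decisions": 3,
    "uses_personal_data": 2,
    "fully_automated": 2,
    "no_human_review": 1,
}

# Hypothetical cutoffs as (minimum score, level), checked from highest to lowest.
LEVEL_CUTOFFS = [(6, "high"), (3, "medium"), (0, "low")]


def score_assessment(answers: dict[str, bool]) -> int:
    """Sum the weights of questions answered "yes"; unanswered questions count as "no"."""
    unknown = set(answers) - set(QUESTION_WEIGHTS)
    if unknown:
        raise ValueError(f"unrecognized questions: {sorted(unknown)}")
    return sum(w for q, w in QUESTION_WEIGHTS.items() if answers.get(q, False))


def classify(score: int) -> str:
    """Map a score to the first level whose minimum it meets."""
    for minimum, level in LEVEL_CUTOFFS:
        if score >= minimum:
            return level
    raise ValueError(f"score {score} is below the lowest cutoff")


answers = {"affects_eligibility_decisions": True, "uses_personal_data": True}
print(score_assessment(answers), classify(score_assessment(answers)))  # 5 medium
```

If `no_human_review` were also answered "yes", the score would rise to 6 and the system would move to "high". This shows how much the chosen weights and cutoffs, rather than the underlying AI system, can drive a classification. Treating unanswered questions as "no" is also a design choice that can understate risk, so it would need to be documented.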

- The survey of AI governance tools in this report includes tools from each region. Some examples include:

- An updated process for acquisition of public sector AI from Chile’s public procurement directorate, ChileCompra

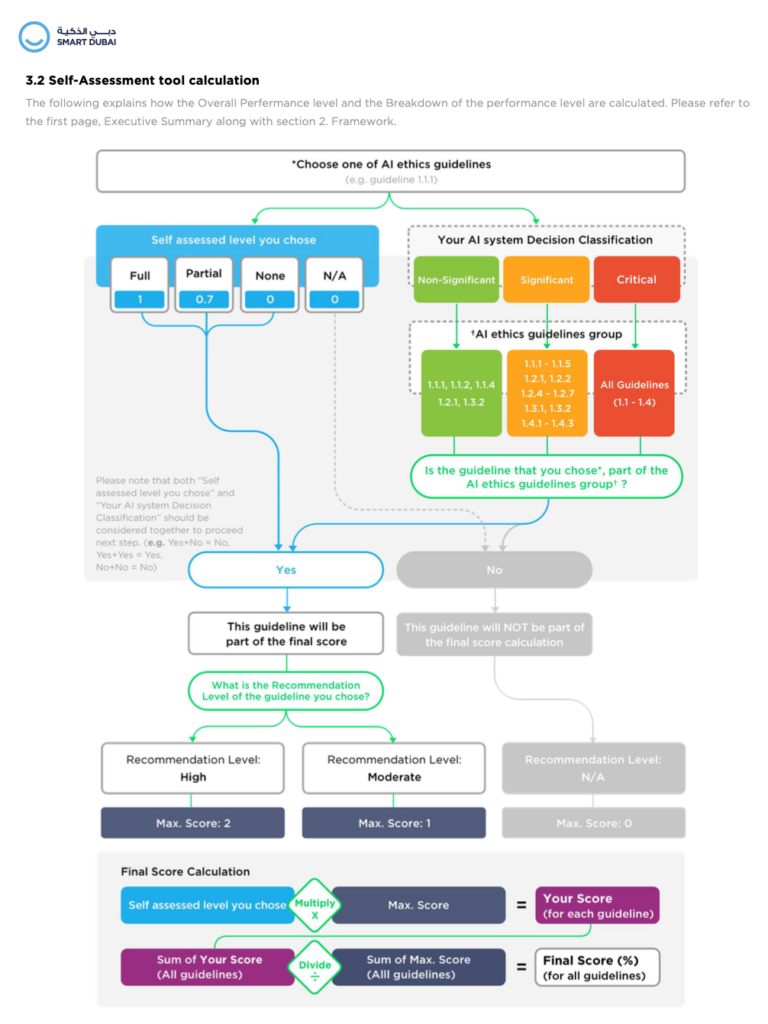

- Self-assessment-based scoring systems from the Governments of Canada and Dubai and Kwame Nkrumah University of Science and Technology in Ghana

- Software and a technical testing framework from Singapore’s Infocomm Media Development Authority

- An AI risk management framework from the US National Institute of Standards and Technology (NIST)

- A culturally-sensitive process for reducing risk and protecting data privacy throughout the lifecycle of an algorithm from New Zealand’s Ministry for Social Development

- A vast repository of AI governance tool types from The Organization of Economic Cooperation and Development (OECD), a multilateral institution

The report also includes two detailed case studies, on AI fairness and on explainability, and provides suggestions for how to begin building an evidence base for an AI governance ecosystem. AI governance tools are nascent and flexible, and when they are fit for purpose and applied as intended, they can improve the health of the AI ecosystem at its implementation layer. However, much more work needs to be done to build the evidentiary basis for an evaluation environment for this ecosystem.

This report analyzes and discusses the governance structures in place today that are intended to protect privacy and govern large data ecosystems by facilitating effective and trustworthy management of data flows. It discusses how data privacy and governance regulations adopted as recently as 10 years ago no longer fit as well or work as effectively in some AI environments, particularly where advanced versions of AI are in use. The research and analysis conducted for this report indicate that we do not yet know which structures, whether replacements for or evolutions of existing ones, will effectively protect privacy and govern data in an advanced AI era. It is essential to gather the evidence now for what will work, and to develop an AI governance ecosystem based on this and other evidence.

The research for this report also examined what could help create improvements in the AI ecosystem. It found that there are many unknowns regarding AI governance tools, including which standards, methods, or measurements could best be applied to create transparency and a basis for trustworthy AI. The research also found hopeful avenues and places to start; these include using the Plan-Do-Study/Check-Act cycle to improve the management of AI governance tools and working to improve the documentation of AI governance tools. The report also suggests adapting an early AI governance tools framework from the OECD to provide improved gatekeeper functions for entities that publish collections of AI governance tools.
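The Plan-Do-Study/Check-Act cycle is a general quality management loop. The sketch below shows, under stated assumptions, how one iteration might be applied to a single AI governance tool. The reference cases (inputs paired with independently established expected results), the mismatch-rate criterion, the 0.8 flagging rule, and all names are hypothetical illustrations, not a procedure specified in this report.

```python
from dataclasses import dataclass
from typing import Callable, Sequence


@dataclass
class CycleResult:
    mismatch_rate: float
    decision: str


def run_pdca_cycle(
    tool: Callable[[dict], bool],
    reference_cases: Sequence[tuple[dict, bool]],
    max_mismatch_rate: float,
) -> CycleResult:
    """Run one hypothetical Plan-Do-Study/Check-Act iteration for a governance tool."""
    # Plan: the acceptance criterion and the reference cases are fixed
    # before the tool is run.
    if not reference_cases:
        raise ValueError("a cycle needs at least one reference case")
    # Do: run the governance tool on every reference case.
    outputs = [tool(case) for case, _ in reference_cases]
    # Study/Check: measure how often the tool disagrees with the expected results.
    mismatches = sum(
        output != expected for output, (_, expected) in zip(outputs, reference_cases)
    )
    rate = mismatches / len(reference_cases)
    # Act: adopt the tool if it met the criterion; otherwise revise the tool
    # or its documented scope, then plan the next cycle.
    decision = "adopt" if rate <= max_mismatch_rate else "revise and re-plan"
    return CycleResult(mismatch_rate=rate, decision=decision)


# Hypothetical tool: flags a system when its reported fairness score is below 0.8.
def flag_low_score(case: dict) -> bool:
    return case["score"] < 0.8


# The third case stands for a system that expert review flagged despite its score.
cases = [({"score": 0.75}, True), ({"score": 0.90}, False),
         ({"score": 0.85}, True), ({"score": 0.60}, True)]
print(run_pdca_cycle(flag_low_score, cases, max_mismatch_rate=0.10))
# CycleResult(mismatch_rate=0.25, decision='revise and re-plan')
```

The point of the loop is that each cycle produces a documented measurement and a decision, which over time accumulates the kind of evidence about a tool's fitness for purpose that the report calls for.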

Going Forward

The research for Risky Analysis indicates that an evaluative environment in which AI governance tools can be tested, matured, and validated will require an evidentiary foundation. The work to create this foundation is just beginning and will require multistakeholder cooperation. The World Privacy Forum is committed to continuing to do the work necessary to gather and analyze the evidence that will facilitate the building of an AI governance ecosystem that is based on evidence, protects privacy and other values, is trustworthy, and provides a genuine foundation for regulatory structures and implementation practices that are fit for purpose today and for the coming AI era.

Background and Introduction

AI governance tools are important because they can map, measure, and manage complex AI governance challenges, particularly at the level of practical implementation. The tools are intended to remove bias from AI systems,5 or increase the explainability of AI systems, among other tasks. Seeking an orderly, automated way of solving complex problems in AI systems can create efficiencies. But those same efficiencies, if not well-understood and appropriately constrained, can themselves exacerbate existing problems in systems and in some cases create new ones. This is the case with AI governance tools, an important and nascent part of AI ecosystems which this report defines as:

AI Governance Tools:

Socio-technical tools for mapping, measuring, or managing AI systems and their risks in a manner that operationalizes or implements trustworthy AI.6 7

An AI governance tool can be used to evaluate, score, audit, classify, or improve an AI system, its decision outputs, or the impacts of those outputs. These tools come in many forms. This report classifies AI governance tools into the following categories: practical guidance, self-assessment questionnaires, process frameworks, technical frameworks, technical code, and software.

While AI governance tools offer the promise of improving the understanding of various aspects of AI systems or their implementations, not all AI governance tools accomplish the goals of mapping, measuring, or managing AI systems and their risks, which we argue are essential features of an effective AI governance tool. Further, given the lack of systematic guidance, procedures, or oversight for their context, use, and interpretation, AI governance tools can be utilized improperly or out of context, creating the potential for errors ranging from small to significant.

For example, AI governance tools can be used in novel or “off-label”8 ways, which can lead to meaningful errors in contextualization and interpretation. Some of the more complex AI governance tools can create additional risk by producing a rating or score that in and of itself can be subject to error or misinterpretation, especially if there is a lack of documentation and guidance for use of the tool. All told, flawed usage and interpretation can result in a gap between what people want these tools to accomplish, and what these tools actually do accomplish.
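Documentation is one way to make off-label use visible. The sketch below assumes that a tool publisher documents intended contexts of use and interpretation guidance in a machine-readable record. The record's fields, the `review_proposed_use` check, and the example tool are hypothetical. A check like this can flag an undocumented use, but it cannot establish that a documented use is valid.

```python
from dataclasses import dataclass, field


@dataclass
class ToolDocumentation:
    """Hypothetical documentation record published alongside a governance tool."""
    name: str
    intended_contexts: set[str] = field(default_factory=set)
    produces_score: bool = False
    interpretation_guidance: str = ""


def review_proposed_use(doc: ToolDocumentation, context: str) -> list[str]:
    """Return warnings for a proposed use; an empty list means nothing was flagged."""
    warnings = []
    if not doc.intended_contexts:
        warnings.append("no intended contexts documented; fitness for purpose unknown")
    elif context not in doc.intended_contexts:
        warnings.append(f"off-label use: '{context}' is not a documented context")
    if doc.produces_score and not doc.interpretation_guidance:
        warnings.append("tool outputs a score but documents no interpretation guidance")
    return warnings


doc = ToolDocumentation(
    name="example-bias-metric",
    intended_contexts={"consumer lending"},
    produces_score=True,
)
for warning in review_proposed_use(doc, "employment screening"):
    print(warning)
```

Here a tool documented only for consumer lending is proposed for employment screening, so the check reports both the off-label context and the missing guidance for interpreting its score.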

Lessons learned from earlier AI policy implementations

AI governance tools can exacerbate risk when there are gaps in controls and standards for the tools, their context of use, and other items, such as their outputs or results. AI governance tools range from simple questionnaires all the way to software, so the ways in which they are used to map, measure, and manage the risks of AI systems can differ substantially. The quality of an AI governance tool and of any quantifications it produces matters greatly. For instance, an AI output in the form of a score can seem deceptively simple to interpret. However, AI score outputs are historically notorious for requiring great care and proper contextualization to interpret and apply accurately.9 This is an important area to understand because challenges with today’s AI governance tool outputs, which are in some cases scores, reflect a long history of broader AI scoring approaches (of which there are many) that for decades have been studied, understood, and in some cases, regulated.10

Some AI governance tools, such as those intended to remove bias from an AI system, may provide a score or rating to indicate the prominence or presence of certain biases. Not all AI governance tools produce quantified measures or scores. However, where they do, the score must be properly interpreted and validated with objective criteria for the specific context in which it is used. Accomplishing these tasks consistently across the full body of AI governance tools and tool types requires a range of policy guidance, from informal technical and policy guidance to formal legal guidance or regulation, depending on the tool being used, its output, and the context and purpose of its use.
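To illustrate how simple such a score can look, the sketch below computes one common group fairness measure, the disparate impact ratio (the lower of two groups' favorable-decision rates divided by the higher one). The choice of metric, the data, and the 0.8 cutoff, which comes from the "four-fifths rule" in US employment selection guidelines, are assumptions for illustration; the report does not prescribe them.

```python
# Rule-of-thumb cutoff from US employment selection guidance ("four-fifths rule");
# used here only as an illustration, not as a general standard for AI systems.
FOUR_FIFTHS = 0.8


def selection_rate(decisions: list[int]) -> float:
    """Share of favorable (1) decisions in a group."""
    if not decisions:
        raise ValueError("group has no decisions")
    return sum(decisions) / len(decisions)


def disparate_impact_ratio(group_a: list[int], group_b: list[int]) -> float:
    """Lower selection rate divided by the higher one; 1.0 means equal rates."""
    rate_a, rate_b = selection_rate(group_a), selection_rate(group_b)
    higher = max(rate_a, rate_b)
    if higher == 0:
        raise ValueError("no favorable decisions in either group")
    return min(rate_a, rate_b) / higher


group_a = [1, 1, 1, 1, 0, 0, 0, 0]  # 4 of 8 favorable -> 0.5
group_b = [1, 1, 1, 0, 0, 0, 0, 0]  # 3 of 8 favorable -> 0.375
ratio = disparate_impact_ratio(group_a, group_b)
print(ratio, ratio < FOUR_FIFTHS)  # 0.75 True
```

With only eight decisions per group, changing a single decision in group B would move the ratio to 1.0 (4 of 8) or to 0.5 (2 of 8). The number alone says nothing about whether the difference is meaningful, which is why the score has to be validated for its context of use.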

Using examples from the classical machine learning context, some countries regulate AI systems that specifically affect decisions related to eligibility, including AI systems that automate decision-making associated with credit reporting. AI systems trained to analyze credit eligibility often produce a credit score as an output. As mentioned, some of these systems are already subject to regulation, and this is true across multiple jurisdictions.11 Most credit scoring regulations are intended to provide transparency regarding automated decisions, error correction, and redress, among other features of credit scoring systems. In regulated scoring models, scores are evaluated for fit, accuracy, and other factors based on the evidence, and evaluative techniques and processes guide proper implementation of the scoring systems.12 There is enough history and established policy around credit scoring systems and their risks that credit scores, their use, and their interpretation are well understood. For example, it is well understood that errors or problems introduced by flaws in the data, the analysis, or even the implementation or interpretation of the scoring can create meaningful impacts for people, groups of people, and communities.13

Credit scoring systems and regulations provide many lessons. However, there is an additional policy lesson here beyond just the importance of regulating credit scoring: many thousands of other AI scoring systems exist, and most of these are not formally regulated.14 In fact, regulated scores are rare. The Scoring of America analyzed many kinds of AI scores beyond credit scoring—from patient frailty scores to consumer prominence scores indicating purchasing power to identity scores used to quantify the validity of an identity.15

The 2014 Scoring of America report found that unregulated AI scores of that time operated a lot like analytical plumbing, humming along in the background of many business processes. These scores are plentiful and largely unseen, but nevertheless could have an impact on bias, fairness, privacy, transparency, and other issues. The lack of broader policy action regarding the various AI scoring systems that were not in scope of credit scoring regulation resulted in widespread and opaque use of a variety of scores spanning a range of risk levels.

Today, AI governance tools are in an intriguingly similar position in that they, too, are increasingly common and are poised to become a critical part of the evolution of the “AI analytical plumbing,” despite the fact that they are often not subject to evidence-based assessments or regulation. Even though AI governance tools are nascent and largely unregulated, they are already in widespread use across the world, and across sectors. For example, some AI governance tools are already in use in eligibility contexts, such as to measure AI systems used in relation to employment. While legal scholars who research these fields know about some of these tools, their uses, and their risks, the knowledge is not yet widespread.16

Additionally, because AI governance tools are often made available with minimal documentation and have little to no regulation, these tools exist in various stages of quality assurance. Many new AI governance tools are in development today. But their risks, and the policies that will consistently address these risks, are largely not yet well developed, or in some cases, not present at all. Given the beneficial potential of AI governance tools,17 it is worth making meaningful efforts to understand more about them, how they operate in today’s AI environments, and how the AI governance tools environment can be improved while incorporating existing legal and policy guardrails from other regulatory regimes.

Addressing policy disruptions stemming from old and new forms of AI: incorporating lessons learned from the data governance and privacy domain

Human rights, privacy, and data governance laws and policies are currently in various stages of change as a result of AI. Some older AI systems that emerged in past decades have had regulatory oversight in place for many years; for example, credit scoring models were regulated beginning as early as the 1970s. However, the emergence of advanced AI models18 is creating novel disruptions, and questions abound about what new regulations for newer models should look like. Even though existing approaches are not necessarily responsive to the changes in AI, or are being bypassed, there is still much to be learned from the history of data governance and privacy.

We begin with a discussion of terminology. Data governance and privacy are related, but they are not interchangeable. Data governance is a comprehensive approach to the entirety of data of an organization or entity that ensures the information is managed through the full data lifecycle. This can include data collection practices, data security, quality, documentation, classification, lineage, cataloging, auditing, sharing, and other aspects. Data privacy is a subset of data governance and is best defined in context as forms of protecting either personal data, or the personal data of a group of people. Privacy is often seen in terms of individual data rights, such as the right to deletion, and so forth. While the conception of privacy as an individual right is currently ascendant in terms of legislation today,19 conceptions of privacy as a group or community-based privacy right are emerging as well, and can be found, for example, in Māori approaches to privacy.20

In response to changes in technology, new opportunities, and other developments, data governance policy, laws, and institutions were introduced, developed, and adjusted mightily over the decades. These evolutions were driven by an urgent need to respond to then-radical changes in technology and governance policy. The impetus was to provide responses to a variety of emerging threats and opportunities related first to the emergence of the computer, and later, to the emergence of the Internet and subsequent factors, such as the emergence of social media platforms.

In the late 1960s, attention to data governance, data protection, and privacy began slowly, with small developments here and there around the world. Responding to the growth of personal computing, countries enacted different privacy laws beginning in the 1970s and 1980s.21 It did not take long before the differences and limits in these national laws created problems with international data flows. Europe began to address these problems, and the EU, after some significant effort, adopted a Data Protection Directive22 in the 1990s. The shortcomings of the Directive and the challenges with its implementation resulted in its replacement by the EU General Data Protection Regulation,23 which has been enforced since 2018. Many other countries around the world now follow the EU privacy model. There is no question that GDPR forms a near-worldwide regulatory structure.24

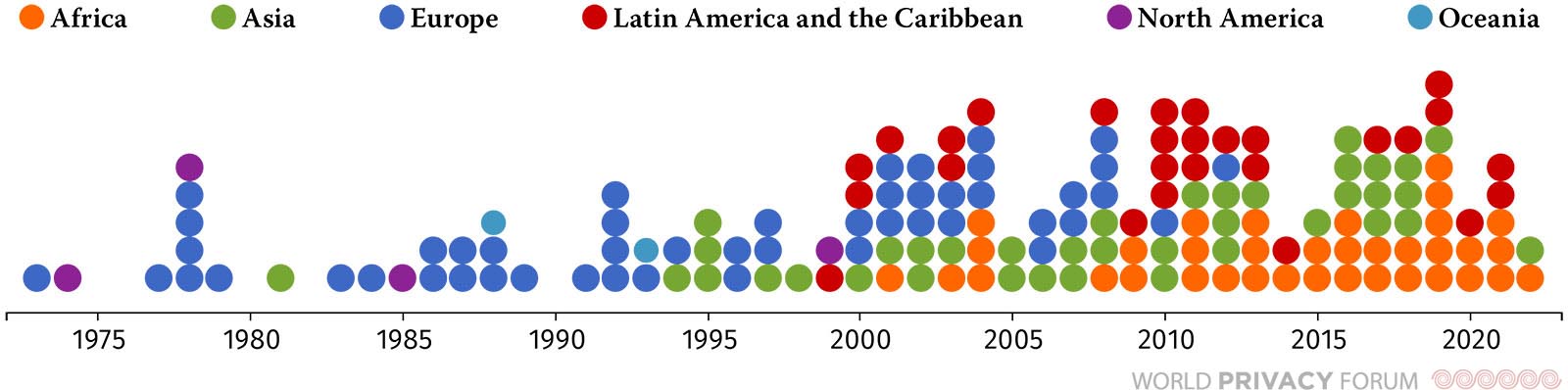

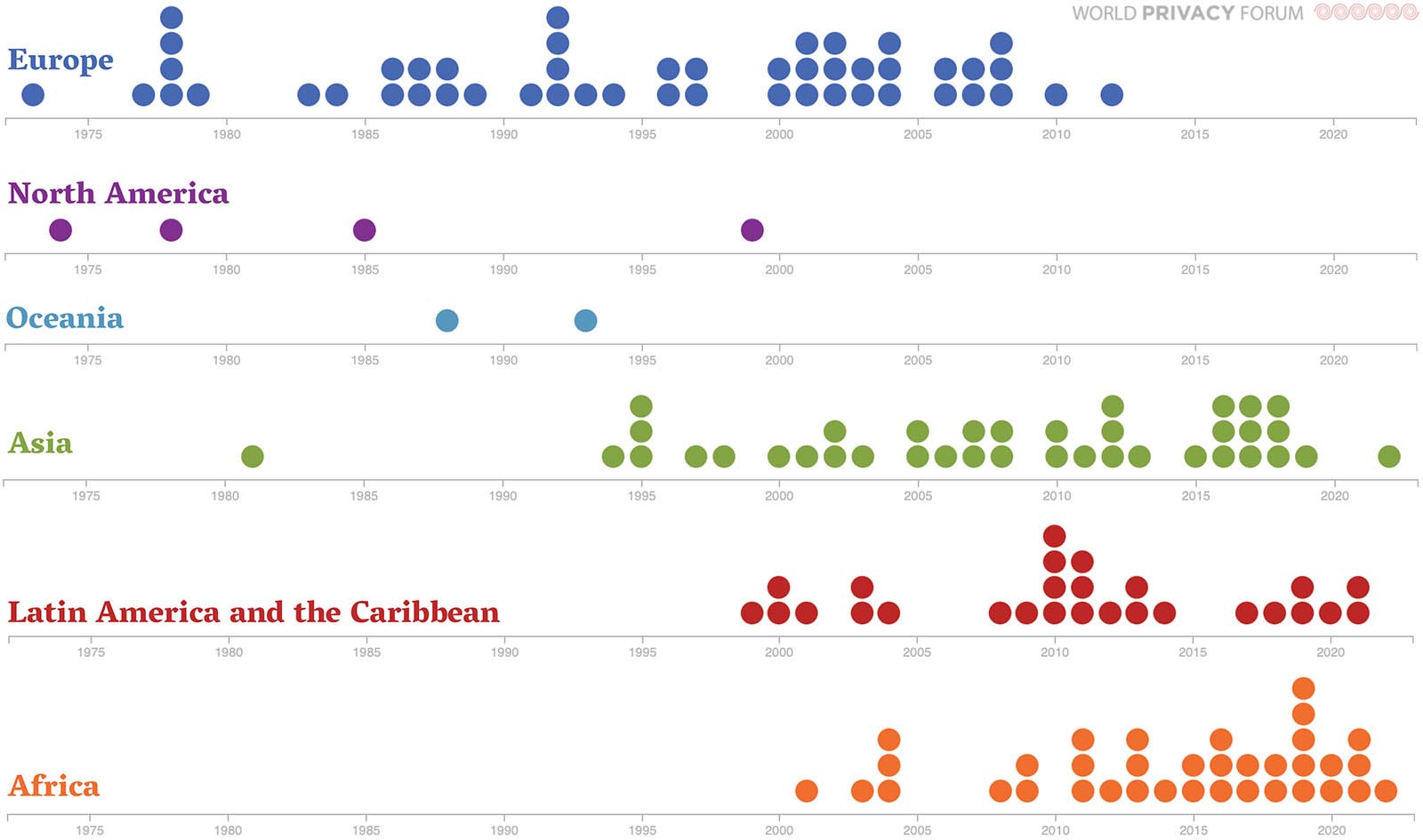

See, for example, Figures 2 and 3, which visualize the distribution of data governance and data protection laws across the world. Note the distinct patterns in how data protection laws spread through global regions over time.

Figure 2: Table of Global Privacy Laws

(Source: World Privacy Forum. Research: Pam Dixon, Kate Kaye. Data Visualization: John Emerson.)

Figure 3: Table of Global Privacy Laws, Regional Breakout

(Source: World Privacy Forum. Research: Pam Dixon, Kate Kaye. Data Visualization: John Emerson.)

For decades, data policy kept roughly in step with technical developments, albeit with some delay and at a much slower pace. For example, credit score models were regulated roughly 20 years into early credit model development.25 These first major credit system regulations emerged in the 1970s, at the beginning of a series of worldwide data governance and privacy developments that then unfolded in incremental steps over decades.26

Fair Information Practices (FIPs),27 the core statement of data governance and privacy values, originated in the United States in 1973, were restated by the Organisation for Economic Co-operation and Development (OECD) in 1980,28 and became the basis for many privacy laws and policies around the world. Eventually, FIPs faded into the background, not because the policies were wrong, but because general policies that had served so well for so long were not specific enough to address ongoing developments in technology, industry, and government. To offer one example, FIPs did not call for privacy agencies, but countries quickly recognized the value of privacy agencies or data protection authorities, and the idea spread around the world. Data protection authorities enforce data protection and governance laws and help guide the effective implementation of data governance ecosystems at the ground level.29

As privacy laws and institutions matured, it became clear over time that solutions which had seemed responsive in theory did not always work well in practice, and that ideas that worked in one jurisdiction or social context did not always fit in others. For example, GDPR and GDPR-like legislation, which grew out of the 1995 EU Data Protection Directive and, like the Directive, focus on individual privacy rights, do not always fit well in some contexts, including Indigenous contexts, where privacy and data are often handled as community rights.30 31 Jurisdictions and stakeholders contributed additional ideas. There was a lot of experimentation, which built an evidentiary basis over time. The data governance and privacy learning curve stretched over decades, and the various stakeholders in data ecosystems are still learning. Similarly, AI regulations have, for the most part, moved forward in incremental steps.32

In contrast to the long evolution of data governance and privacy laws and norms, advanced forms of AI, though in development since 2017, jumped to public awareness seemingly overnight in 2022.33 The arrival of newer and more advanced AI models prompted quick regulatory reactions and proposals that in some cases departed from decades of established knowledge and lessons. A quick response is, on the whole, a hopeful sign. However, responses will need to ensure input from all stakeholders and ensure that existing legal and other guardrails, including existing human rights and privacy guardrails, are integrated. One lesson from the history of data governance and privacy is that it takes time to understand what works and what does not.

It is worth recalling that various forms of machine learning have been used and regulated for many decades. As discussed, credit score regulations, which address data inputs, algorithms, set points, and other aspects of machine learning, have existed in some jurisdictions since the 1970s. These early machine learning regulations often include well-understood governance mechanisms that are common today, such as error correction, a formal dispute process, extensive government oversight, and other forms of consumer redress. The procedural and administrative controls used in these types of regulations were once new, but are now international standards. These standards, norms, and older governance models enshrined in law need to be taken fully into account by those seeking to address the risks of emerging advanced AI systems.

AI governance tools hold out great promise for mapping, measuring, and managing new AI risks. Work to address how AI governance tools can be managed competently, with appropriate and helpful guardrails, is important and will entail building the evidence and measurement environments needed to facilitate this work. Without an evidentiary basis for policy, we are all just making guesses, which is not sufficient to address the actual risks the developing AI ecosystem may pose.

The importance of acknowledging what we do not yet know

The more advanced forms of AI now in use introduce novel problems and new architectures, which may require a range of new governance approaches. Necessary responses include changes in the way privacy, human rights, and data governance are operationalized and implemented in AI and other ecosystems.

This is new territory, and sufficient evidence regarding valid and fit-for-purpose governance of these systems does not yet exist, even in a world filled with AI activities. We simply do not know how privacy and data governance models must adjust to remain effective. There is a continuing need to construct evidence-based models of privacy and data governance and to use these as a basis to respond to the new challenges and opportunities introduced by advanced AI models.

At the same time, governments, academics, companies, and others have jumped into what appears to be a high-speed race to regulate AI. The reaction seems nearly instantaneous, especially in comparison to the long ramp that data privacy and data governance policy experienced. It seems likely that socio-technical and policy responses to AI will not have the luxury of developing slowly over decades, or perhaps even years. Regulations of varying quality, validation level, and enforceability will be coming soon. This contrasts with the environment of a decade ago, when AI governance was not seen as needing immediate action.

As discussed, many of the concerns at the heart of data governance and protection policymaking (fairness, due process, discrimination, openness, rights and responsibilities of data controllers and data subjects, and limits on data use and disclosure) apply to AI activities that include processing of information about individuals, and increasingly, about groups of people and communities. Evidence built up over time shows what does and does not work for these systems. However, some of the older governance models are too narrow to address the full range of issues today. Yet some of the newer AI governance models proposed today lack the deep policy knowledge and experience from recent decades.

The world is beginning to acknowledge the substantial impacts of modern AI on privacy, fairness, bias, transparency, and other values. However, there is not yet enough evidence to analyze what the full range of those impacts might be, or how AI activities will develop.

It is critically important to exercise humility regarding what we do and do not know about advanced AI models.34 We must ensure that evidence gathering and better understanding precede a rush to solutions: regulation must relate to the reality on the ground. We can accomplish a better result through testing and validation of the technical and policy systems at hand. Otherwise, regulation may well be unfit for purpose and fail to accomplish the goals which are vitally important to attain.

Creating a healthier AI ecosystem and fit-for-purpose guardrails: Toward building an evidence-based AI governance tools environment to operationalize and implement trustworthy AI goals

AI governance tools hold great promise to create improvements at the implementation level. They can function as implementation interfaces for AI systems and ecosystems, and they are already in widespread distribution internationally. This report provides an international survey of the tools as they exist today, a detailed analysis of these tools and their benefits and risks, and a discussion of how they operate in various contexts. The report suggests several concrete pathways for improving outcomes and ensuring that AI governance tools, which are intended to improve AI systems, do just that.

While this report focuses specifically on AI governance tools as an important body of tools for improving AI systems, many additional pathways to improvement exist for more broadly defined AI systems, and these pathways can be experimented with. Privacy Impact Assessments (PIAs), now commonplace across the world, could be helpful. PIAs were developed in the mid-1990s and reached maturity around 2005-2009, and they are still undergoing cycles of improvement.35 However, PIAs alone will not be enough to address the full range of challenges that AI presents. Additional tools will be needed.

For example, the development of ethical or trustworthy AI Impact Assessments is already underway.36 37 A high-quality and verifiable AI impact assessment that evaluates impacts and validity is essential for those relying on machine learning models and AI system outputs.38 The need for assessment is especially urgent for those models and systems that support decisions about patient health; matters pertaining to employment and other eligibility-related or eligibility-adjacent decisions; law enforcement and criminal justice decisions; and other activities directly affecting the lives of individuals, groups of individuals, and communities.

It is important to recognize, however, that assessments for privacy, human rights, certain aspects of governance, and other assessments and validation related to today’s AI activities are far from mature. Even the most perfect assessment tools will not be enough to address the full range of challenges across the AI lifecycle. More will be needed to address every aspect of the lifecycle, including implementation through AI governance tools, and this work has barely begun with regard to advanced forms of AI.

Methodology

This report surveys AI governance tools to assess how widespread they are, where they are located, and how they might (or might not) improve AI systems. This research examines two use cases in depth, plus several smaller examples.

To conduct the preliminary work for this report, the first part of the research sought to determine: 1) how widespread AI governance tools are; 2) where they are published geographically or virtually; 3) what entities created them; 4) the goals of the tools; and 5) the extent to which the tools met their stated objectives. To assess these questions, we sampled a limited set of AI governance tools across jurisdictions and organizations.

This research also analyzed scholarly literature that assesses the quality, functionality and applicability of AI governance tools. The analysis encompasses literature published between 2017 and 2023 that analyzes AI fairness and explainability tools. The research for this report concluded Oct. 31, 2023. Only a few minor updates were added after that time.

We also conducted an extensive comparative study and analysis of similar fields to determine existing norms and standards for documentation, quality assessment, testing, ongoing monitoring, and other aspects of assessment and post-market improvement cycles, and we interviewed multiple experts in the AI field.

This report adopts the term AI Governance Tools and introduces a lexicon of AI Governance Tool Types. The research conducted for Part II of this report made it clear that there was a need to clarify and distinguish among the items commonly labeled generically as “AI Tool” or “AI Toolkit” in the international AI policy and governance sphere.

For more information regarding methodology guiding the evaluation of AI governance tools and Finding 2: Some AI governance tools feature off-label, unsuitable, or out-of-context uses of measurement methods, see Appendix C.

Findings

1. AI governance tools are widely published and offered by governments, multilateral institutions, and other organizations.

AI governance tools exist across Africa, Asia, Europe, North America, South America, and Oceania (Australia and New Zealand), at varying levels of maturity and dispersion. Governments, multilateral organizations, academia, civil society, business, and others utilize these tools in different types of AI implementations.39 This research focuses on AI governance tools used, promoted, or cataloged primarily by governments and multilateral institutions, especially those tools that seek to implement principles of trustworthy AI.40 It remains difficult to quantify precisely how many tools exist.

2. Some AI governance tools feature off-label, unsuitable, or out-of-context uses of measurement methods.

More than 38% of AI governance tools reviewed in this report either mention, recommend, or incorporate at least one of three measures shown in scholarly literature to be problematic. These include off-label, unsuitable, or out-of-context applications when used to measure AI systems.41

3. AI Governance Tool providers and hosts have important gatekeeper and quality assurance roles.

Some collections of AI governance tools are published online. This research focused on tools and tool catalogs published by governments and multilateral organizations. These tools and tool catalogs vary significantly in size and types of offerings. Some consist of a listing of an AI governance tool with little to no additional information. Some tool collections go further and provide some level of assessment, or at least a detailed description of the tools.

The OECD, for example, publishes a catalog of AI governance tools that is among the largest offered to date.42 The OECD framework for its tool catalog may become a helpful model going forward. Even so, at least 12 items featured in the OECD’s Catalogue of AI Tools and Metrics either mention, recommend, or incorporate the off-label measures discussed in Part I of this report, which features use cases of problematic AI fairness and explainability measures. Tool catalog hosts and publishers have important roles as gatekeepers, with responsibilities to ensure tool quality and transparency.

Secondary Findings

1. Standards and guidance for quality assessment and assurance of AI governance tools do not appear to be consistent across the AI ecosystem.

This research did not seek, as a primary goal, to determine whether quality assessments are in place for each AI governance tool or tool catalog. However, it became apparent during the research process that while some AI governance tool providers have conducted quality assessments of their tools, others have not; and when providers do conduct quality assessments, they do not always follow an internationally recognized standard.

Complete product labeling, documentation, provision for user feedback, requirements for testing, or provision of redress in the case of problems are important features of traditional products, but these features are not always present in AI governance tools.

Pathways for Improvements: Summary

The following is a high-level summary of the solutions and steps that will begin to address the problems and opportunities the research for this report identified. At the end of Part I of this report, a section titled Pathways for Building an Evaluation Environment and Creating Improvements discusses in detail potential pathways and solutions toward improving the AI governance tools environment.

Establishing an Evaluation Environment for AI Governance Tools

There is not enough data yet about how AI governance tools interface with specific standards. As a result, foundational work needs to be done to build an evaluative AI governance tools environment that facilitates validation, transparency, and other measurements. Establishing an evaluation environment for AI governance tools will be crucial to create a healthy AI governance tools ecosystem, and more broadly, a healthier AI ecosystem.

In considering what might help build a transparent, evaluative environment for AI governance tools, the application of international and other standards holds potential. For example, the extensive quality assurance ecosystem articulated in formal standards and norms is well-understood across many mature sectors.

Although many established standards already exist and are important to acknowledge, currently, there is limited knowledge about the functionality of these standards as applied to AI governance tools. Testing of available tools would improve understanding of how existing standards might apply, and it would also support the ecosystem based on evidence. The Plan-Do-Check (or Study)-Act cycle will be a key tool to assist in this maturation.

Establishing Baseline Requirements for Documentation and Labeling of AI Governance Tools

The research found high variability in the documentation and labeling of AI governance tools. This suggests that developing norms for documentation and labeling of AI governance tools could produce meaningful improvements. For example, it would be helpful if tools routinely included information about the developer, date of release, results of any validation or quality assurance testing, and instructions on the contexts in which the methods should or should not be used. A privacy and data policy is also important and should be included in the documentation of AI governance tools.

Additional items can be provided in the documentation, for example:

- Appropriate performance metrics for validity and reliability

- Documentation should provide the suggested context for the use of an AI governance tool. AI systems are about context, which is important when it comes to applicable uses, environment, and user interactions. A concern is that tools originally designed for application in one use case or context may potentially be used in an inappropriate context or use case or “off-label” manner due to lack of guidance for the end user.

- Documentation should give end users an idea of how simple or complex it would be to utilize a given AI governance tool.

- Cost analysis for utilizing the method: How much would it cost to use the tool and validate the results?

- A data policy: A detailed data policy should be posted in conjunction with each AI governance tool. For example, if applicable, this information could include the kind of data used to create the tool, if data is collected or used in the operation of the tool, and if that information is used for further AI model training, analysis, or other purposes.

- Complaint and feedback mechanism: AI governance tools should provide a mechanism to collect feedback from users.

- Cycle of continuous improvement: Developers of AI governance tools should maintain and update the tools at a reasonable pace.

- Conflict of interest: The identities of those who financed, resourced, provided, and published AI governance tools should be made public in a prominent manner in conjunction with publication or distribution of the tool.
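The report does not prescribe a format for these items. One way a tool developer or catalog host might make them checkable is a structured label that flags which items are missing. The sketch below is hypothetical: the class name `GovernanceToolLabel` and every field name are illustrative assumptions that mirror the items listed above, not part of any existing standard or catalog.

```python
from dataclasses import dataclass, field, fields
from typing import List


@dataclass
class GovernanceToolLabel:
    """Hypothetical documentation label mirroring the recommended items."""
    developer: str = ""
    release_date: str = ""
    validation_results: str = ""          # results of validation / QA testing
    suitable_contexts: List[str] = field(default_factory=list)
    unsuitable_contexts: List[str] = field(default_factory=list)  # guards against off-label use
    performance_metrics: List[str] = field(default_factory=list)  # validity and reliability
    ease_of_use: str = ""                 # how simple or complex the tool is to use
    cost_estimate: str = ""               # cost to use the tool and validate results
    data_policy: str = ""
    feedback_channel: str = ""            # complaint and feedback mechanism
    last_updated: str = ""                # evidence of continuous improvement
    funders_and_publishers: List[str] = field(default_factory=list)  # conflict of interest

    def missing_items(self) -> List[str]:
        """Return the names of label items that were left empty."""
        return [f.name for f in fields(self) if not getattr(self, f.name)]


# Hypothetical, partially documented tool.
label = GovernanceToolLabel(
    developer="Example Lab (hypothetical)",
    release_date="2023-01-15",
    suitable_contexts=["hypothetical screening-model audit"],
)
print(label.missing_items())
```

A catalog host could, for instance, decline to list a tool, or mark it as incompletely documented, while `missing_items()` is non-empty.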

The crucial role of NIST and the OECD in convening stakeholders and developing an evaluative environment and multistakeholder consensus procedures for high-quality AI governance tools and catalogs

The National Institute of Standards and Technology (NIST) could play an additional role in AI by building an environment in which an evidentiary basis for the socio-technical contexts and best practices for AI governance tools could be created. WPF urges NIST to undertake this work, including developing recommendations for a process for developing, evaluating, and using AI governance tools.

The OECD could play an additional role in AI by creating a definitive best-practice framework for AI governance tool or tool catalog publishers by further developing and refining its existing work in this area. This report includes initial suggestions for this work in the Pathways for Building an Evaluation Environment and Creating Improvements discussion at the end of Part I. This builds on the OECD’s existing work on a framework for AI governance tools. WPF urges the OECD to gather international stakeholders to further this work.

Measurement Modeling: A Structured Approach to Aligning AI Governance Tools and Policy Goals

Measurement modeling could play a positive role in improving outcomes and the quality of AI governance tools. A shorthand for understanding measurement modeling is that it is a structured method that can be used to illuminate gaps between the actual results of measurement systems and policy goals.43

When embedded in policy rules and guidance, specific methods or metrics for building more fair, accountable and transparent AI systems and gauging AI risks can have a lasting impact on the ways society comprehends AI systems and their effects on people’s lives. What and how we measure something44 not only reflects our understanding of it, but imposes frameworks or structures for our future understanding.

Measurement modeling is one approach that can assist in this process.45 For example, measurement modeling has been proposed as a method for recognizing fairness gaps in computational systems.46 47

Measurement modeling essentially asks evaluators to distinguish between what or how a metric or tool measures, and the goals of the measurement. In other words, is there proper alignment between a metric or tool and the goals of policy? Does the metric or tool actually measure for the same things the policy aims to achieve? The method might be applied when vetting or validating AI governance tools or metrics used to gauge AI fairness, for example.

Some researchers have devised an audit framework for assessing the validity and stability of specific measures, such as personality testing metrics used in automated hiring systems. For instance, an audit using one such socio-technical algorithmic auditing framework found that two real-world personality prediction systems showed “substantial instability with respect to key facets of measurement, and hence cannot be considered valid testing instruments.”48

This and other frameworks for assessing measurement methods could be helpful to policymakers as they inspect AI governance tools.

Going forward, ensuring alignment of AI governance tools with policy goals for trustworthy AI will be of the utmost importance. For this reason, it will be helpful to assess underlying assumptions about what the measurement mechanisms or methods used in AI governance tools actually do. This very issue is at the heart of the next section of the report, which covers detailed use cases of AI governance tools in their implementation contexts.

Part I. Discussion: Critical Analysis of AI Governance Tools

Governments from Australia to Singapore, Ghana to India, and Europe to the US have begun to put AI principles into practice by presenting methods for measuring and improving the impacts of AI systems through a variety of AI governance tools. The overwhelming majority of AI governance tools reviewed in this report emphasize two particular governance goals among many:49 (1) fairness, or avoidance of bias and discrimination in AI-based decisions and outputs, and (2) explainability, or interpretability of those systems.50

The impulse to operationalize AI principles by measuring and improving AI impacts is a positive one, which will ideally guide users, developers, and other actors on a path toward more beneficial and trustworthy AI systems. Measuring the world around us is one way humans make sense of it. It’s only natural that people want to quantify fairness, explainability, and other aspects of AI.

The measures established today could have lasting effects on how the impacts of AI are reflected and interpreted for years to come. Today’s measurements will form the foundation of risk scores, consumer scores,51 ratings, and other statistics we rely on to help make sense of these systems and enforce the rules and regulations addressing them. No one wants to standardize ill-suited methods or embed them in policy in ways that could introduce new problems or harms. That’s why it is so important to make sure measurement approaches align with policy goals.

Part II of this report reviews and analyzes a wide-ranging group of more than 30 AI governance tools and adjacent guidance distributed in 13 national jurisdictions across a number of regions. This section’s focus is intentionally narrowed to encompass literature that analyzes AI fairness and explainability tools. There is a wealth of relevant literature from scholars in technical and socio-technical fields published between 2017 and 2023. This rich and growing body of work investigates a variety of approaches to measuring and improving AI fairness and explainability. Put simply, it seeks to “measure the measures.”

The literature cited and reviewed in this section paints a vivid portrait of what could go wrong if AI governance tools are applied without rigorous evaluation or in inappropriate contexts. This body of literature questions some commonly used approaches for assessing or improving AI fairness or explainability.52 It shows that AI measurement methods can lead to AI system accuracy failures, unintentional harms to individuals or groups, or manipulation of metrics to produce tainted measurement outcomes.

Scholars interviewed for this report generally agree that the mission to guide AI actors toward developing and operating more trustworthy AI systems through AI governance tools is beneficial. However, their work may not always be known to policymakers or others influencing those tools. As noted in this report’s findings, some AI governance tools mention, recommend, or incorporate off-label uses of potentially faulty or ill-suited tools that are scrutinized in the growing body of scholarly literature.53 Our intention is to point out salient areas in which the scholarly research we’ve reviewed might inform future AI governance and AI governance tools.

Measuring AI Fairness Measures

A considerable body of research has emerged in recent years highlighting the potential problems when AI actors use automated AI governance tools that promise to create systems that are more fair,54 but do so without properly assessing those methods or their applicability to a chosen purpose. For example, particularly in the past few years, scholars from around the world have raised alarms about application of metrics that do not align with specific AI fairness-related tasks, such as measuring bias in a dataset used to train an AI model or assessing the risk of unfair decisions made by an AI system.

Recent research intended to elucidate basic requirements of appropriate fairness metrics suggests that “the choice of the most appropriate metrics to consider will always be application-dependent.” This scholarly literature finds that assessment of a risk model’s fairness in itself is crucial because such models are used to inform human decision-makers.55

Governments are just beginning to recognize the need to assess methods intended to improve AI fairness. For example, as discussed in Part II of this report, Chile’s 2022 bidding and quality assurance requirements for government acquisition of AI systems stress the importance not only of evaluating the system’s impacts on equity, but of evaluating the equity metrics themselves.56

Detailed guidance for implementing those requirements states that the type of metric employed is important; it calls on the public sector entity making the purchase to determine appropriate metrics, rather than the technology vendor. In the end, the goal is for both parties to collaborate on determining the most appropriate metrics.57

Part II of this report illustrates that governments and other organizations want to put AI principles into practice, and many also want to find ways to produce quantifiable AI risk and analysis measures in the form of fairness scores or ratings. Yet, some of the literature referenced here reminds us that there are pitfalls inherent in quantifying fairness through one-size-fits-all assessments, encoded technical tools, or other quick technical fixes.

This section contains two use cases. The first spotlights an inappropriate use of metrics to automatically alleviate bias from disparate impacts of AI systems. The second highlights the use of SHAP and LIME, two related approaches intended to explain how AI systems produce particular outputs or decisions, both of which have attracted scrutiny among computer science researchers.

Use Cases in AI Fairness

The Risks of Using the US Four-Fifths Employment Rule for AI Fairness Without Appropriate Context

In the laudable mission to ensure that AI systems do not produce negative impacts on specific groups of people, an array of tools and metrics intended to remove disparate impacts from AI datasets and systems has emerged. Some of these tools use as their foundation encoded translations58 of a complex US rule: the “Four-Fifths rule.”59

The Four-Fifths Rule is well known in the US labor recruitment field as a measure of adverse impact and fairness in hiring selection practices. Detailed in the Equal Employment Opportunity Commission Uniform Guidelines on Employee Selection Procedures of 1978,60 the rule is based on the concept that a selection rate for any race, sex, or ethnic group that is less than four-fifths (80%) of the rate for the group with the highest selection rate is evidence of adverse impact on the groups with lower selection rates. The rule has been widely applied by employers,61 lawyers,62 and social scientists63 to determine whether hiring practices are lawful and whether they result in disparate or adverse impacts against certain groups of people.
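Stripped of its context, the check that many tools encode reduces to a few lines of arithmetic: compute each group's selection rate, divide it by the highest group's rate, and flag any ratio below 0.8. The sketch below is a minimal illustration of that bare computation only; the function names and the applicant and selection counts are hypothetical, and none of the contextual judgment the Guidelines call for is represented.

```python
from typing import Dict


def selection_rates(applicants: Dict[str, int], selected: Dict[str, int]) -> Dict[str, float]:
    """Selection rate for each group: number selected / number of applicants."""
    return {group: selected[group] / applicants[group] for group in applicants}


def impact_ratios(applicants: Dict[str, int], selected: Dict[str, int]) -> Dict[str, float]:
    """Each group's selection rate divided by the highest group's selection rate."""
    rates = selection_rates(applicants, selected)
    highest = max(rates.values())
    if highest == 0:
        raise ValueError("no group has any selections; ratios are undefined")
    return {group: rate / highest for group, rate in rates.items()}


def four_fifths_flags(applicants: Dict[str, int], selected: Dict[str, int],
                      threshold: float = 0.8) -> Dict[str, bool]:
    """True for groups whose impact ratio falls below the 80% benchmark."""
    return {group: ratio < threshold
            for group, ratio in impact_ratios(applicants, selected).items()}


# Hypothetical applicant and selection counts, for illustration only.
applicants = {"group_a": 100, "group_b": 80}
selected = {"group_a": 50, "group_b": 28}

print(four_fifths_flags(applicants, selected))
# {'group_a': False, 'group_b': True}
```

In this hypothetical, group_b's selection rate (35%) is 70% of group_a's (50%), so the bare check flags it. Everything the Guidelines treat as context, such as sample size, statistical significance, and the selection procedure itself, sits outside these few lines.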

Employers with more than 100 employees are required to maintain information regarding disparate impact in hiring selection rates, according to the Uniform Guidelines.64 While the guidelines state that a selection rate below four-fifths is “generally” regarded by federal enforcement agencies as evidence of adverse impact, they explain that in some cases, smaller differences in selection rates may constitute adverse impact, and in others, greater differences may not. In other words, context matters.65

Despite its widespread use, legal, employment, and technical experts have cautioned against use of the Four-Fifths Rule as a singular means of assessing disparate impact.66 Many experts warn against simplistic applications of the rule, both within its historical use in US labor contexts as well as for its use in AI contexts.67

In June 2023, the chair of the U.S. Equal Employment Opportunity Commission cautioned against relying solely on meeting the 80% threshold. Calling the Four-Fifths rule “a check” and just one standard used at the start of federal investigations, rather than the only measure for gauging disparate impact, she said that “smaller differences in selection rates may constitute disparate impact.”68

Further, according to a U.S. Justice Department legal manual addressing disparate impact, “not every type of disparity lends itself to the use of the Four-Fifths rule, even with respect to employment decisions.”69 Legal scholars also have questioned the limits of the Four-Fifths rule, noting that it does not adequately capture hiring disparities in statistical terms.70

Despite those caveats, the Four-Fifths Rule and its 80% benchmark have been repurposed in computer code form and used in a variety of AI fairness metrics and tools.71 The rule is applied in both employment72 and non-employment contexts73 as a means of measuring or “removing” bias or disparate impacts.74 It is also used outside of the US employment context and is encoded into AI governance tools offered in other jurisdictions.75

In a 2019 study of 18 vendors offering algorithmic pre-employment assessments, researchers found that three vendors “explicitly mentioned the 4/5 rule” and several “claimed to test models for bias, ‘fixing’ it when it appeared.”76 A more recent review indicates this is still happening. As of August 2023, some companies providing AI software publicly mentioned the four-fifths rule as a basis for addressing disparate impact in their systems.77

It is not known at this time how many of the entities and individuals using metrics or tools that incorporate the rule’s 80% benchmark are aware of the full background, context, and underlying rationale of the four-fifths rule as encoded in those tools. It is also unknown how many of those using the tools outside of a US employment context would continue using them if they were aware of the potential problems.

Scholarly researchers also argue that applying the rule in algorithmic recruitment systems is “coarse as it is agnostic to quality of candidates” and does not “account for uncertainties and biases in the data systematically.”78 Scholars further find that the Four-Fifths Rule, as codified in AI fairness software, should not be used in contexts outside US hiring and US labor law compliance.79 They also assert that tools incorporating the Four-Fifths Rule to produce non-discriminatory AI systems may miss other important factors weighed in traditional assessments, such as which subsets of applicant groups should be measured using the rule.80

Meanwhile, some civil rights and employment lawyers argue that using the Four-Fifths Rule as a test for disparate impact is unreliable in some cases81 and, particularly in relation to AI used in labor recruitment, “is not only unsupported by the case law, but it is also bad policy.”82

Use Cases in Automating Fairness: A Compendium of Potential Risks

The movement toward establishing practices that create fairer AI outcomes is positive. However, scholarly literature reviewed for this report indicates an array of unintended consequences of applying metrics or other technical approaches to measure or improve AI fairness.

For example, attempting to de-bias AI systems by abstracting, simplifying, and de-contextualizing complex concepts such as disparate impact is just one of many problematic approaches emerging within the AI governance tool environment.

It is worth noting there are significant distinctions among definitions of fairness, which may complicate the efficacy of technical approaches designed according to one perception of fairness when used in other contexts.83 Some key concerns and potential problems:

“Fairness gerrymandering”:

“Fairness gerrymandering” is a term of art used in the scholarly literature on AI fairness tools to describe cases in which algorithms that take fairness into account have the paradoxical effect of making their outcomes particularly unfair to one subgroup.84 Technically speaking, this occurs when “a classifier appears to be fair on each individual group, but badly violates the fairness constraint on one or more structured subgroups defined over the protected attributes (such as certain combinations of protected attribute values).”85

Fairness gerrymandering can occur when a method for achieving algorithmic fairness is applied to only a small number of pre-defined groups. For example, consider two binary features corresponding to race and gender. A classifier may appear equitable for some combinations of those features, such as “Black man” or “white woman,” but not for another combination, such as “Black woman.”86 The risk is that an analytical process may produce unfair outcomes for groups it does not explicitly consider. In other words, a process that creates more equitable outcomes for some groups might produce undesirable side effects for other groups.87
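The sketch below uses hypothetical selection counts to show the pattern. When race and gender are each examined on their own, selection rates are identical. The intersectional subgroups, however, are not. Selection-rate parity stands in for the fairness constraint here. The cited research treats classifiers and constraints more generally.

```python
from itertools import product

# Hypothetical (selected, total) counts for each (race, gender) subgroup.
counts = {
    ("Black", "man"): (60, 100),
    ("Black", "woman"): (40, 100),
    ("white", "man"): (40, 100),
    ("white", "woman"): (60, 100),
}

def selection_rate(matches):
    """Pooled selection rate over every subgroup whose key satisfies matches."""
    selected = sum(s for key, (s, n) in counts.items() if matches(key))
    total = sum(n for key, (s, n) in counts.items() if matches(key))
    return selected / total

races, genders = ["Black", "white"], ["man", "woman"]
by_race = {r: selection_rate(lambda k, r=r: k[0] == r) for r in races}
by_gender = {g: selection_rate(lambda k, g=g: k[1] == g) for g in genders}
by_subgroup = {
    (r, g): selection_rate(lambda k, r=r, g=g: k == (r, g))
    for r, g in product(races, genders)
}

print(by_race)      # both races: 0.5, so parity holds by race
print(by_gender)    # both genders: 0.5, so parity holds by gender
print(by_subgroup)  # Black women (and white men) 0.4 vs. 0.6 for the others
```

An audit that checks only race and gender separately would see no disparity. Yet the rate for Black women is two-thirds of the highest subgroup rate.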

Abstraction traps, oversimplification, and lack of critical context:

Because “abstractions are essential to computer science, and in particular machine learning,”88 they are inherent in technical interventions that can create “abstraction traps” when used in societal contexts.89 The aforementioned abstraction of the four-fifths rule is just one example of an abstraction trap. Another such trap might result if an algorithm designed to solve a problem in one social setting, such as predicting risk of recidivism, is also used in relation to loan default. Such abstractions may “render technical interventions ineffective, inaccurate, and sometimes dangerously misguided when they enter the societal context that surrounds decision-making systems.”90

Also, in an effort to codify governance goals such as fairness and explainability, AI governance tools may lack critical context. Removing or reducing the proper context for an AI governance tool “may flatten nuance and suggest that the tools to solve complex problems lie within the confines of the kit,” or can “[abstract] away” crucial elements of the social context in which AI systems are deployed.91

For example, the NIST AI Risk Management Framework, an AI governance tool reviewed in Part II of this report, recognizes that metrics used to measure AI risk “can be oversimplified, gamed, lack critical nuance, become relied upon in unexpected ways, or fail to account for differences in affected groups and contexts.”92

Scholarly literature also addresses problems that result from application of formal mathematical models of “fair” decision-making used in policy, analyzing the potential for decision-making using algorithms to “violate at least one normatively desirable fairness principle.”93

Fairness and risk scoring model tradeoffs:

Simultaneously maximizing fairness across legally protected groups and maximizing accuracy is a topic of intense scrutiny in the literature, because achieving both is nearly impossible. Research evaluating methods and metrics used to score or predict AI risk levels indicates that the distribution of true risks may differ among groups and, in particular, that these distributions may not be proportional to one another.

This could lead to unfair allocation of, or access to, resources, among other potentially negative outcomes.94 The issue can arise whenever decision-makers use risk assessments, in sectors such as mortgage lending, employment, college admissions, child welfare, and medical diagnosis. While some risk scoring models are regulated, many others are not.
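One well-known way to see this tradeoff follows directly from confusion-matrix definitions. Suppose two groups have different base rates, meaning a different share of people in each group truly have the outcome. A model that gives both groups the same precision (PPV) and the same true positive rate (TPR) must then give them different false positive rates. The only exceptions are degenerate cases such as perfect precision. The sketch below computes the implied false positive rate for two hypothetical groups.

```python
def implied_false_positive_rate(base_rate, ppv, tpr):
    """False positive rate forced by a group's base rate, PPV, and TPR.

    From the definitions TP = TPR * P, FP = FPR * N, and PPV = TP / (TP + FP):
        FPR = (P / N) * ((1 - PPV) / PPV) * TPR
    where P / N = base_rate / (1 - base_rate).
    """
    odds = base_rate / (1 - base_rate)
    return odds * ((1 - ppv) / ppv) * tpr

# Hypothetical groups scored by one model with equal PPV (0.7) and TPR (0.8).
base_rates = {"group 1": 0.3, "group 2": 0.5}
fprs = {g: implied_false_positive_rate(b, ppv=0.7, tpr=0.8) for g, b in base_rates.items()}
for group, fpr in fprs.items():
    print(f"{group}: FPR = {fpr:.3f}")
```

Precision and recall are equal across the two groups. Even so, group 1 ends up with a false positive rate of about 0.147, while group 2 ends up with about 0.343.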

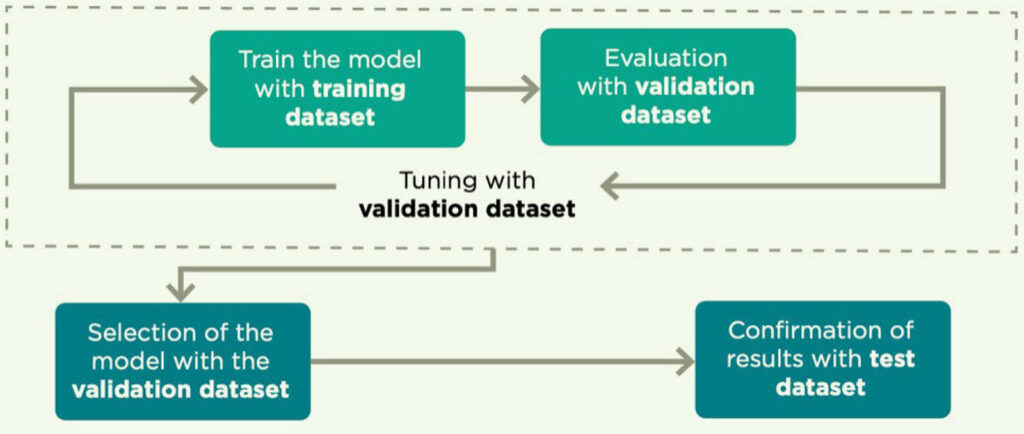

Limited applicability throughout AI life cycle:

Some AI governance tools or AI fairness auditing software may apply only during limited phases of the AI life cycle. For example, some AI fairness tools may apply only to the model training stage of AI development. While this is important, establishing fairness at one life cycle stage does not ensure that fairness persists through later stages of the AI life cycle.

For example, if models are adjusted after deployment, the fairness of their outputs can be negatively affected. In addition, some fairness tools do not support early stages of the ML development life cycle, such as problem formulation.95 There is also a risk that AI fairness tools will be applied to inappropriate use cases, misinterpreted, or misused.96
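The sketch below illustrates one simple kind of post-deployment change, using hypothetical scores for two groups. At the decision threshold used during an audit, the model shows equal selection rates. After the threshold is raised, the same unchanged model fails the check. Threshold changes are only one kind of adjustment; retraining and shifts in incoming data can also change outcomes.

```python
def selection_rate(scores, threshold):
    """Share of scores at or above the decision threshold."""
    return sum(score >= threshold for score in scores) / len(scores)

def impact_ratio(scores_by_group, threshold):
    """Lowest group selection rate divided by the highest."""
    rates = [selection_rate(s, threshold) for s in scores_by_group.values()]
    return min(rates) / max(rates)

# Hypothetical model scores for two groups of ten people each.
scores = {
    "A": [0.90, 0.80, 0.70, 0.65, 0.55, 0.45, 0.40, 0.30, 0.20, 0.10],
    "B": [0.85, 0.75, 0.58, 0.56, 0.52, 0.45, 0.35, 0.30, 0.20, 0.10],
}
audited = impact_ratio(scores, threshold=0.5)   # threshold in place at audit time
adjusted = impact_ratio(scores, threshold=0.6)  # hypothetical post-deployment change
print(f"at audit: {audited:.2f}, after change: {adjusted:.2f}")
```

The ratio falls from 1.00 at the audited threshold to 0.50 after the change. This is why a one-time check at the training stage says little about later behavior.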

Limited applicability to third-party AI systems:

Third-party AI systems, including third-party software, hardware, and data components, among others, “may complicate risk measurement.”97 The inner workings of third-party AI systems are not always transparent to users. In addition, certain AI governance tools and metrics may not be applicable when attempting to assess third-party AI software or systems built using unstructured data, as opposed to structured data.98 99 The OECD notes the importance of understanding where, when, and what parts of an AI system are built in-house or by a third party, noting three configurations for how this might be operationalized.100

Regional contextual constraints:

Off-the-shelf AI auditing software products or open-source governance tools may have been designed for use in specific countries that have high AI capacity.101 As a result, these products do not always address concerns specific to Asian, African, Caribbean, or Latin American jurisdictions, among others.

For instance, an AI governance tool may not recognize nuances among the wide variety of Asian and African sub-populations, demographics, and languages. Masakhane Research Foundation, a grassroots organization based in Kilifi, Kenya, that is mentioned in Part II of this report,102 generates, curates, and annotates datasets that are inclusive of the languages people speak throughout the African continent.103

Singapore’s Generative AI Evaluation Catalogue104 also states that LLM evaluation techniques tend to be Western-centric and should take user demographics and cultural sensitivities into account. In addition, India’s Tamil Nadu State Policy for Safe and Ethical AI105 points to the importance of cultural relevance for AI governance tools. Work of this kind is deeply embedded in regional AI and cultural contexts.

Lack of definitional consistency:

There are significant distinctions among definitions of fairness, which may complicate the efficacy of technical approaches designed according to one perception of fairness when used in other contexts.106

The difficulty of assessing fairness and privacy in AI systems:

Assessing AI model fairness may conflict with data minimization and data protection goals, as well as with existing regulations in some circumstances. Measuring disparate impacts against particular groups requires knowledge of sensitive attributes such as race or age, the very types of data attributes that some data governance regulations may restrict. AI fairness researchers have documented this phenomenon107 and have also introduced methods for measuring fairness while protecting sensitive data.108
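As one illustration of measuring fairness while protecting sensitive data, the sketch below adds Laplace noise to per-group counts before computing selection rates. This is a standard differential privacy technique. It is not necessarily the method used in the research cited above. The counts, the privacy parameter epsilon, and the random seed are all hypothetical. A real deployment would need a careful analysis of its privacy budget.

```python
import random

def laplace_noise(scale, rng):
    """Draw Laplace(0, scale) noise as the difference of two exponential draws."""
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)

def private_selection_rates(counts, epsilon, seed=None):
    """counts maps group -> (selected, applicants); returns noisy selection rates.

    Adding or removing one person changes at most one applicant count and one
    selected count, each by 1 (L1 sensitivity 2), so each count gets
    Laplace noise with scale 2 / epsilon.
    """
    rng = random.Random(seed)
    scale = 2 / epsilon
    rates = {}
    for group, (selected, applicants) in counts.items():
        noisy_selected = max(0.0, selected + laplace_noise(scale, rng))
        noisy_applicants = max(1.0, applicants + laplace_noise(scale, rng))
        # Clamping is post-processing and does not weaken the privacy guarantee.
        rates[group] = min(1.0, noisy_selected / noisy_applicants)
    return rates

# Hypothetical counts: true selection rates are 0.30 and 0.18 (ratio 0.6).
counts = {"group 1": (300, 1000), "group 2": (180, 1000)}
noisy = private_selection_rates(counts, epsilon=1.0, seed=7)
ratio = min(noisy.values()) / max(noisy.values())
print(noisy, f"noisy impact ratio = {ratio:.2f}")
```

With groups this large, the noise barely moves the selection rates. With small groups, the same noise can swamp the disparity being measured, so the tension described above does not fully disappear.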

It is important to note that this paradox is not present in all data ecosystems. For example, National Statistical Organizations (NSOs) operate under a derogation in most countries of the world, even where data governance legislation is present. There is a unique set of rules providing ethical guardrails for NSOs in precisely these kinds of analytical circumstances.109 In addition, there are many other exemptions for conducting analysis using sensitive data; for example, public health data during a national public health emergency is often treated more leniently because data protection rules may be suspended in certain emergency situations.110

Inspecting AI Explainability

Governments, corporations, and others using AI systems, along with those affected by these systems, want to understand how these systems make predictions and decisions. Through what is referred to as explainability, explicability, or interpretability, governments and others hope to illuminate the unseen aspects of AI systems.111

How AI Transparency, Explainability, and Interpretability Differ

As with many AI-related terms, the meanings of explainability-related terminology vary widely.112 Some policymakers have distinguished among the terms transparency, explainability, and interpretability. Research shows that knowing precisely how some AI systems produce outputs can be extremely difficult, even though components of these systems can be made transparent for evaluation. Such components include the parameters or weights that affect how a model behaves113 and the datasets used to train and test it.

Also, according to some definitions used in the AI policy sphere, there are nuanced differences between AI interpretability and explainability. These definitions suggest that interpretability is intended to satisfy the inquiries of end users or people affected by an AI system, possibly to facilitate some form of redress. Explainability, on the other hand, is the aim of technical practitioners attempting to describe the mechanisms that lead to AI system or algorithmic outputs, possibly to determine what is needed to adjust and improve them.114

AI explainability represents the capacity of an AI system to reveal how it arrived at a particular output, such as a decision, prediction or score. (For more details, see the sidebar on transparency, explainability, and interpretability.)

AI developers and practitioners are working to find ways to illuminate the inner workings of AI systems, some of which, such as neural networks, are becoming increasingly complex.115 116 However, there is no consensus regarding whether genuine AI explainability or interpretability can be achieved.117 Nevertheless, some scholarly literature shows that it is possible to design AI models to be interpretable. It also argues that in some cases, such as situations involving high-stakes decisions, “interpretable models should be used if possible, rather than ‘explained’ black box models.”118
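Post-hoc explanation tries to explain a model after the fact. For contrast, the sketch below shows one simple form an inherently interpretable model can take: a points-based scorecard. Its output is fully determined by a few human-readable rules. The rules, point values, and threshold are hypothetical and are not drawn from any real system. Designing and validating such a model is a separate task.

```python
# Hypothetical scorecard: (description, points, rule). Not a validated model.
SCORECARD = [
    ("one or more prior defaults", 3, lambda a: a["prior_defaults"] >= 1),
    ("employed less than 12 months", 2, lambda a: a["months_employed"] < 12),
    ("debt-to-income above 40%", 2, lambda a: a["debt_to_income"] > 0.40),
]
THRESHOLD = 4  # hypothetical cut-off for a "high risk" label

def score(applicant):
    """Return (total points, rules that fired), so every output can be
    traced to the exact rules that produced it."""
    fired = [(desc, pts) for desc, pts, rule in SCORECARD if rule(applicant)]
    total = sum(pts for _, pts in fired)
    return total, fired

total, reasons = score({"prior_defaults": 1, "months_employed": 8, "debt_to_income": 0.35})
print(total, total >= THRESHOLD, reasons)
```

The model's reasoning is its own explanation: 5 points, drawn from two named rules. No separate explanation method is needed.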

Some literature goes further still, cautioning against the emphasis on AI explainability goals, suggesting that demands for AI explainability “nurture a new kind of ‘transparency fallacy.’”119

In addition, some AI auditing experts doubt rhetoric suggesting that some AI systems are too densely complicated to be explained, and suggest that with additional transparency, “the mystery disappears.”120

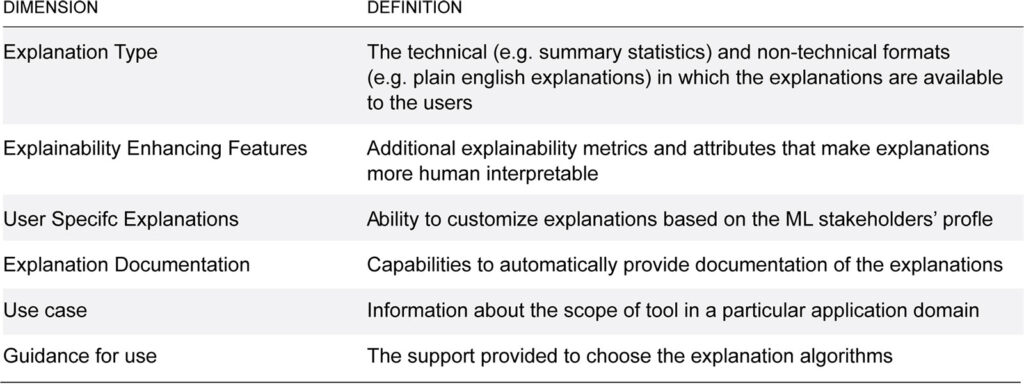

The research for this report identified specific questions and concerns related to AI explainability and interpretability detailed in the literature. Here, we highlight a specific use case involving SHAP and LIME, two related approaches intended to explain how AI systems produce particular outputs or decisions, both of which have attracted scrutiny among computer science and AI researchers.

In addition, later in this section, we discuss scholarly literature addressing the limits and unintended consequences of explainable AI methods such as risk of manipulation and inappropriate applications.

SHAP and LIME: Popular but Faulty AI Explainability Metrics

In the absence of widely adopted AI explainability standards, two approaches, SHAP and LIME, have grown in popularity despite attracting abundant criticism from scholars who have found them to be unreliable methods of explaining many types of complex AI systems.121

Use of both SHAP122 and LIME123 has increased in part because they are model agnostic, meaning they can be applied to any type of model that data scientists build. An abundance of accessible and easy-to-use documentation related to the two methods has also fostered interest in them.124
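SHAP is based on Shapley values from cooperative game theory. The sketch below computes exact Shapley values by brute-force enumeration for a hypothetical three-feature model. It is model agnostic in the sense described above, because it only calls the model's prediction function. It is not the shap library, which relies on approximations and background data. It also assumes that "absent" features are set to a single baseline, a choice that strongly affects the results.

```python
from itertools import combinations
from math import factorial

def shapley_values(predict, x, baseline):
    """Exact Shapley values for predict at x, with absent features set to baseline."""
    n = len(x)

    def value(subset):
        # Features in subset take x's values; all others take the baseline's.
        z = [x[i] if i in subset else baseline[i] for i in range(n)]
        return predict(z)

    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                phi[i] += weight * (value(s | {i}) - value(s))
    return phi

# A hypothetical black-box model with an interaction between features 0 and 2.
def model(z):
    return 2 * z[0] + z[1] + 3 * z[0] * z[2]

x, baseline = [1.0, 2.0, 1.0], [0.0, 0.0, 0.0]
phi = shapley_values(model, x, baseline)
print(phi, sum(phi), model(x) - model(baseline))
```

The attributions sum to the gap between the prediction at x and at the baseline, which is 7.0 here. The interaction term is split evenly between features 0 and 2. Choosing a different baseline changes the attributions. The cost of enumeration also grows exponentially with the number of features, which is one reason practical tools rely on approximations.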